Misinformation spreads fast on social media, often outpacing the truth. It has become so ingrained in our daily online experience that we barely question it anymore, and 2024 was a particularly big year for misinformation.

Leading up to the 2024 U.S. presidential election, false claims circulated that Haitian migrants were eating pets. The claims were entirely fabricated, appearing first on social media and then spilling over into the real world. And this was just one example of many.

To combat misinformation, platforms have implemented various fact-checking systems to verify content, flag false claims, and limit the spread of misleading posts. But how does fact-checking actually work? And is it effective in 2025? Let’s break it down.

1. How Fact-Checking Works on Social Media

Fact-checking on social media serves a crucial purpose: preventing the spread of false or misleading information. With billions of users sharing content daily, platforms rely on a combination of human oversight and AI-powered tools to identify and address misinformation.

Here’s why fact-checking is essential:

- Curbing Misinformation: False claims, deepfakes, and manipulated images, which have multiplied with the rise of AI, can go viral quickly and mislead the public. Fact-checking helps slow their spread.

- Protecting Public Discourse: Health issues and global events are common targets for misinformation. Verified information gives people a sound basis for informed discussion.

- Maintaining Platform Integrity: Social media companies face pressure from governments and users to tackle fake news. Fact-checking helps them maintain credibility.

But how do platforms determine what’s true and what’s false?

2. How Social Media Platforms Fact-Check Content

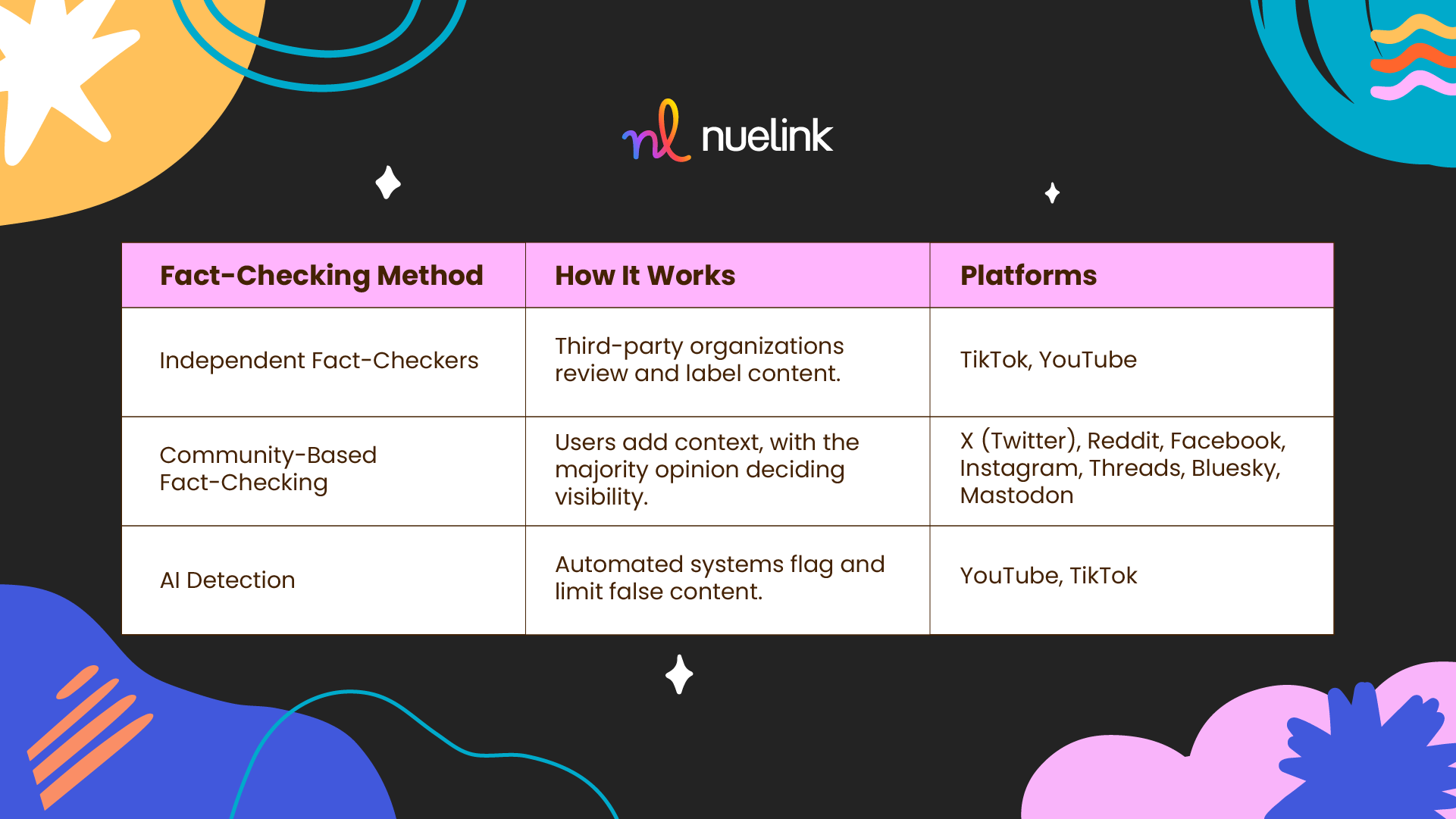

Social media platforms have developed different approaches to fact-checking. These methods vary across platforms, but most rely on a combination of third-party fact-checkers, automated detection systems, and user-driven moderation.

Independent Fact-Checking Organizations

Many platforms partner with third-party fact-checking organizations that assess the accuracy of viral claims. These fact-checkers operate independently and follow established verification standards.

Facebook and Instagram previously collaborated with fact-checking partners across various regions. In January 2025, however, Meta announced that it would end its third-party fact-checking program, starting in the United States, to the displeasure of many.

When a piece of content is rated false, platforms taking this approach apply warning labels and limit its reach in users’ feeds. TikTok follows a similar process, flagging content that has been reviewed by external fact-checkers and preventing it from appearing in recommendations.
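To make the "label and limit" step concrete, here is a minimal sketch of what happens to a post once a fact-checker rating comes back. Everything in it is an assumption for illustration: the `Post` fields, the `"false"` rating string, the label text, and the `demotion_factor` are hypothetical and not taken from any platform's actual system.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Post:
    post_id: str
    rank_score: float                    # hypothetical feed-ranking score
    recommendable: bool = True           # eligible for recommendation surfaces
    warning_label: Optional[str] = None  # text shown to users, if any


def apply_fact_check(post: Post, rating: str, demotion_factor: float = 0.2) -> Post:
    """Return a copy of the post with a label and reduced reach if rated false.

    The rating values and the demotion factor are illustrative assumptions.
    """
    if rating != "false":
        return post  # other ratings are left untouched in this sketch
    return replace(
        post,
        rank_score=post.rank_score * demotion_factor,  # limit reach in feeds
        recommendable=False,                           # keep out of recommendations
        warning_label="Rated false by independent fact-checkers",
    )
```

Note that the post is demoted and labeled rather than deleted, which matches the approach described above: the content stays up, but fewer people see it and those who do get context.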

Community-Based Fact-Checking

Some platforms rely on user-driven fact-checking rather than external organizations. X introduced Community Notes, a system where users can add context to posts by citing credible sources.

These notes are only displayed if multiple contributors with different viewpoints rate them as helpful. This approach differs from traditional fact-checking by decentralizing the process and relying on community consensus rather than expert evaluation.

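Meta has also begun moving its platforms to this approach since early 2025, starting in the United States. While this allows for flexibility, it’s still deeply flawed and often unreliable, because a note only appears once contributors who usually disagree reach consensus.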
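The display rule for community notes, where a note appears only if contributors with different viewpoints rate it helpful, can be sketched in a few lines. This is a deliberately simplified stand-in and not the algorithm X actually runs: the `rater_group` viewpoint labels, the thresholds, and the function name are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    rater_group: str  # hypothetical viewpoint label, e.g. "A" or "B"
    helpful: bool


def should_display(ratings, min_groups=2, min_helpful_share=0.6,
                   min_ratings_per_group=2):
    """Show a note only if enough distinct viewpoint groups find it helpful."""
    votes_by_group = defaultdict(list)
    for rating in ratings:
        votes_by_group[rating.rater_group].append(rating.helpful)

    agreeing_groups = 0
    for votes in votes_by_group.values():
        if len(votes) < min_ratings_per_group:
            continue  # too few ratings from this group to count it
        if sum(votes) / len(votes) >= min_helpful_share:
            agreeing_groups += 1

    return agreeing_groups >= min_groups


# Helpful to one side only: stays hidden.
one_sided = [Rating("A", True)] * 5 + [Rating("B", False)] * 3
# Helpful across both sides: gets displayed.
cross_group = [Rating("A", True)] * 3 + [Rating("B", True)] * 2 + [Rating("B", False)]
```

With these assumed thresholds, `should_display(one_sided)` is `False` and `should_display(cross_group)` is `True`. The sketch also shows the weakness of the model: a note that is accurate but popular with only one group never appears.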

Automated Detection Systems

Many platforms use artificial intelligence to detect misleading content before it spreads. These systems analyze text, images, and video for patterns associated with false claims.

YouTube, for instance, uses AI to surface authoritative sources in search results and flag misleading thumbnails or video titles. TikTok employs automated moderation to identify potential misinformation, although final decisions often involve human review. AI fact-checking is still evolving, and false positives remain a challenge.

3. The Battle Between Free Speech and Moderation

Social media platforms operate at the center of an ongoing debate: how to balance free expression with content moderation? While these platforms provide a space for open discussion, they also enforce policies to limit harmful content.

The tension between these two priorities has led to disputes over censorship, misinformation, and the role of private companies in shaping public discourse.

Social media companies set their own rules on acceptable speech, often guided by legal requirements, advertiser expectations, and user safety concerns. Platforms like Facebook, YouTube, and TikTok enforce policies against hate speech, harassment, and misinformation, although, to be fair, Facebook’s rules have loosened a bit too much lately.

X has shifted toward a more hands-off approach, reducing enforcement in favor of user-driven fact-checking and transparency labels. Moderation policies vary across platforms, leading to accusations of inconsistency.

Some critics argue that enforcement is arbitrary, and they are correct. Others believe platforms do not do enough to curb harmful content, particularly in cases of coordinated disinformation campaigns or targeted harassment, and they are correct too.

Governments have taken different approaches to content moderation. In the U.S., Section 230 of the Communications Decency Act protects platforms from legal liability for user-generated content while allowing them to set their own moderation policies.

This has led to debates over whether platforms should be considered neutral hosts or editorial entities that shape discourse through content removal and algorithmic ranking.

In contrast, countries like Brazil have enacted stricter laws requiring platforms to remove illegal content within specific timeframes. X was, after all, temporarily banned there in 2024 for failing to comply with court orders.

The challenge for platforms is finding a balance between fostering open discussion and preventing harmful speech. Overly strict moderation can stifle legitimate discourse, while too little oversight can allow misinformation and harmful content to spread like wildfire.

4. What’s the Alternative?

As debates over free speech and moderation continue, different models for content governance are emerging. While mainstream platforms rely on a combination of internal policies, automated enforcement, and external pressure, alternative approaches aim to decentralize control, increase transparency, or shift moderation decisions to users.

Decentralized Platforms

One proposed alternative is decentralization, moving away from corporate-controlled moderation to user-driven governance. Platforms like Bluesky and Mastodon operate on federated networks, where moderation policies vary by community.

Instead of a single authority enforcing rules, different servers, or instances, set their own guidelines, allowing users to choose what aligns with their preferences.

However, smaller communities may lack the resources to handle moderation effectively, leading us back to square one.

Algorithmic Transparency and User Control

Some platforms are experimenting with increased transparency and user control over content moderation.

One approach is letting users customize their content feeds based on moderation preferences. This could mean allowing users to opt into different content policies, similar to how parental controls function on streaming services. While this provides more autonomy, it also raises concerns about echo chambers.
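As a rough illustration of opt-in content policies, the sketch below filters a feed according to one user's preferences. The label names, the three actions (`"show"`, `"warn"`, `"hide"`), and the post structure are hypothetical and chosen only for this example, not drawn from any specific platform.

```python
def filter_feed(posts, preferences, default_action="show"):
    """Apply one user's moderation preferences to a list of labeled posts.

    `preferences` maps a content label to "show", "warn", or "hide".
    Labels the user has not configured fall back to `default_action`.
    """
    visible = []
    for post in posts:
        actions = [preferences.get(label, default_action) for label in post["labels"]]
        if "hide" in actions:
            continue  # the strictest matching preference wins
        visible.append({**post, "warn": "warn" in actions})
    return visible


posts = [
    {"id": 1, "labels": []},
    {"id": 2, "labels": ["unverified-claim"]},
    {"id": 3, "labels": ["graphic-content", "unverified-claim"]},
]
strict_user = {"unverified-claim": "warn", "graphic-content": "hide"}
relaxed_user = {}
```

Here `filter_feed(posts, strict_user)` drops post 3 and marks post 2 with a warning, while `filter_feed(posts, relaxed_user)` shows all three posts unmarked. Two users looking at the same underlying content end up with different feeds, which is exactly where the echo chamber concern comes from.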

Legal and Regulatory Solutions

Governments are also considering regulatory alternatives. The EU’s Digital Services Act (DSA) requires platforms to disclose how their moderation systems work and gives users more options to challenge content removal decisions.

However, government-led solutions often face resistance due to concerns about overreach, censorship, and conflicts with free speech laws in different countries, and for good reason.

Community-Led Moderation

Some alternative models involve community-led moderation, where content rules are enforced by a network of users rather than a centralized team. Reddit’s moderation system is an example: each subreddit has its own volunteer moderators who enforce rules specific to that community.

This model allows flexibility but can also lead to inconsistency, favoritism, or the dominance of certain viewpoints.

No single alternative has proven to be a perfect solution. The future of content moderation will likely involve a mix of the above. The effectiveness of these efforts will depend on their ability to scale, maintain accuracy, and gain public trust.

Regardless of how platforms handle moderation, tools like Nuelink can help streamline content management across multiple channels.